The development of 3D models in geotechnical, geological and environmental projects

Introduction

Did you know that almost 70% of geological, geotechnical, and environmental reports do not adequately describe field conditions?

Billions of dollars are spent annually on field investigations, but the results often fail to deliver the expected benefits to the projects they are meant to serve.

While working on the geotechnical investigations for the Itumbiara Dam in Brazil, the American geologist John George Cabrera designed a borehole program to locate a sheared zone in the foundations. The rocks, composed of amphibole gneiss, biotite gneiss, and quartzite, had undergone intense tectonism. The team therefore needed to know the precise distribution of the sheared zone to grout the fractures cost-effectively and, more importantly, to ensure the foundation's safety.

Using the geophysical and borehole information, the project team estimated the grouting volume and added 20% as a factor of safety. Surprisingly, by the end of the process, the amount of grout used was almost 50% more than expected.

The experienced team of geologists coordinated by Mr. Cabrera decided to investigate the reasons for the discrepancy in grout volume. They wanted to confirm that the adopted procedures were adequate, both because the foundations would support massive loads and because the findings would improve estimates in future projects and support new investigation methodologies.

After reviewing the documentation and technical reports, analyzing and reclassifying all the borehole cores, and preparing more detailed cross-sections and field measurements, the team found that the recalculated grouting volume was 5% less than the initial estimate. In other words, the review turned up no errors in the investigations, data, processes, or reports.

What was wrong?

This article answers that question. It describes the legacy modeling techniques, how they evolved, and the innovative technologies that have made 3D modeling the best alternative for accurately understanding geological, geotechnical, and environmental issues.

Methods of investigation

Since the early days, geological, geotechnical, and environmental site investigations have relied on in situ inspections and on gathering samples for analysis, understanding what was on the Earth's surface and deducing what lay underground. It was also necessary to understand the genesis of the structures and morphologies and to propose theories about how they evolved into the present landscape. Furthermore, geologists and engineers needed to determine the stress and strain distribution in order to design safe and cost-effective structures. The Earth is a complex entity and presents many challenges for these tasks, so scientists developed many methods and tools to unravel its intricacy.

These investigations collected data through aerial imagery, geological and geotechnical mapping, geophysical surveys, subsurface exploration, in situ and laboratory testing of materials, and many other methods. None of these data help unless they statistically represent the region under investigation. However, collecting good-quality data is expensive, and the project's budget limits the amount of information available for analysis. Stakeholders often do not realize that saving resources in the early phases affects safety factors. Consequently, they spend more later to guarantee safety or, worse, to mitigate complications.

Nonetheless, using suitable investigation methods and ensuring that the data represent the site well do not guarantee success when making project decisions. The data must also be well integrated and tied to common references so that they support sound conclusions for the design.

3D Model

Data Integration

What was wrong with the conclusions of Mr. Cabrera's team?

In projects of significant magnitude, geoscientists deal with a massive amount of information. The datasets are varied: geological maps, structural measurements, rock and soil samples, geophysical data of several kinds, and drill holes, to name a few. Analyzed individually, each provides insight into its own attributes but says nothing about spatial variability or how the datasets interact.

The geologists and engineers executed the Itumbiara Dam project in the 1980s. They integrated the data manually, comparing cross-sections with profiles and georeferencing the data with almost no automation, without many of the tools and techniques that are now taken for granted. As a result, a considerable amount of information was lost, which affected the estimates.

For example, to correlate geophysical profiles with lithologic data from boreholes, cross-sections had to be matched against the core classification. Likewise, to determine the effects of faults in the directions perpendicular to them, the team compared RQD (Rock Quality Designation) classifications between aligned cores. This process is very time-consuming and prone to assessment mistakes. In addition, we now know that RQD has several inherent limitations. Rock Mass Rating (RMR) and the Q-system, classification methods that play substantial roles in rock mechanics design, now incorporate RQD.

In addition, in those days, measurements were usually taken after the core had been boxed rather than upon exposure, a procedure that creates new fractures and lowers RQD. Furthermore, it was common to count incipient discontinuities that retain tensile strength in gneiss and quartzite as fractures in the classification, which also decreased the index. Finally, a significant source of discrepancy in core-logged RQD was that many professionals neglected the "hard and sound" criterion.

Regulations and good practices have addressed some of these issues, but automation is unquestionably the milestone for a better understanding of sites. It enables better evaluation and analysis of data, as well as repeated assessment and regeneration of models with various input parameters.
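The effect of handling breaks on RQD can be illustrated with a short calculation. The sketch below uses the conventional definition of RQD (the summed length of sound core pieces of at least 10 cm, as a percentage of the core run length). The function name and the piece lengths are hypothetical and chosen only for illustration; they are not data from the Itumbiara project.

```python
def rqd(piece_lengths_cm, run_length_cm, threshold_cm=10.0):
    """Rock Quality Designation, in percent.

    Sums the lengths of core pieces that are at least `threshold_cm` long
    and divides by the total length of the core run. The caller is assumed
    to pass only pieces that meet the "hard and sound" criterion.
    """
    if run_length_cm <= 0:
        raise ValueError("run_length_cm must be positive")
    sound = sum(p for p in piece_lengths_cm if p >= threshold_cm)
    return 100.0 * sound / run_length_cm


# Hypothetical 150 cm core run, logged upon exposure.
logged_on_exposure = [40, 35, 25, 18, 12, 8, 7, 5]

# The same run logged after boxing, assuming handling broke the 18 cm
# piece into 9 + 9 and the 12 cm piece into 6 + 6.
logged_after_boxing = [40, 35, 25, 9, 9, 6, 6, 8, 7, 5]

print(round(rqd(logged_on_exposure, 150), 1))   # 86.7
print(round(rqd(logged_after_boxing, 150), 1))  # 66.7
```

In this made-up run, two handling breaks are enough to drop the index by about 20 percentage points, even though the rock mass itself is unchanged.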

3D-Fault Model

3D Models

Interest in using 3D models to visualize and resolve construction problems has existed since the 1970s. Before that, entrepreneurs built models for marketing purposes in showrooms and expositions, using plastic, wood, or other materials that were easy to assemble. As powerful computers became available, modeling software was the next step, and CAD (Computer-Aided Design) became widespread in the construction industry in the 1980s. New technologies and even more powerful computers made 3D modeling possible, and during the last decade, BIM (Building Information Modeling) matured into the technology we know today.

BIM is not a single piece of software; it is a concept structured in levels. For example, Level 0 is paper-based drawings with zero collaboration; Level 1 includes 2D construction drawings and some 3D modeling; at Level 2, each team works in its own 3D model and exchanges information through common file formats; at Level 3, teams work with a shared 3D model; Levels 4, 5, and 6 add scheduling, cost, and sustainability information. Each level requires software with more features.

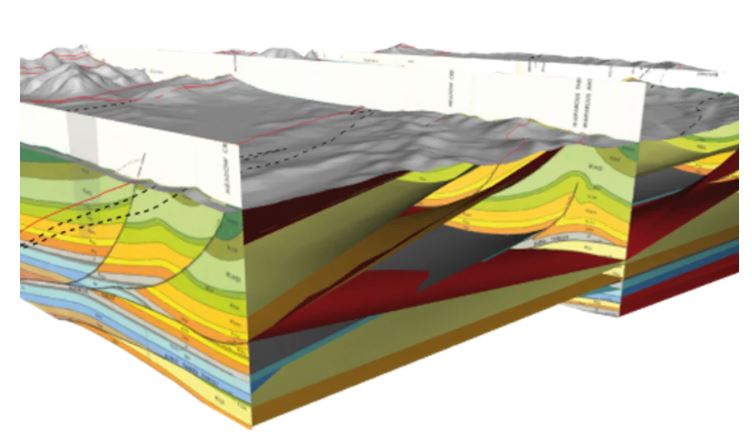

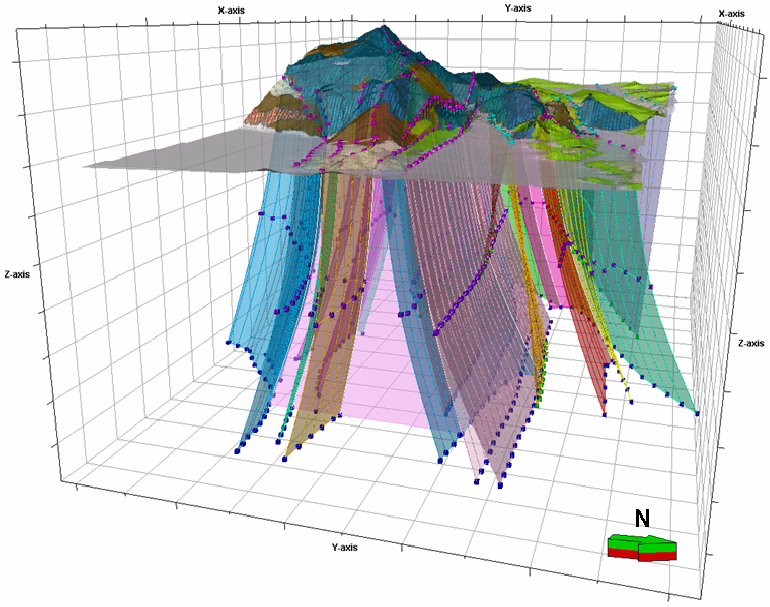

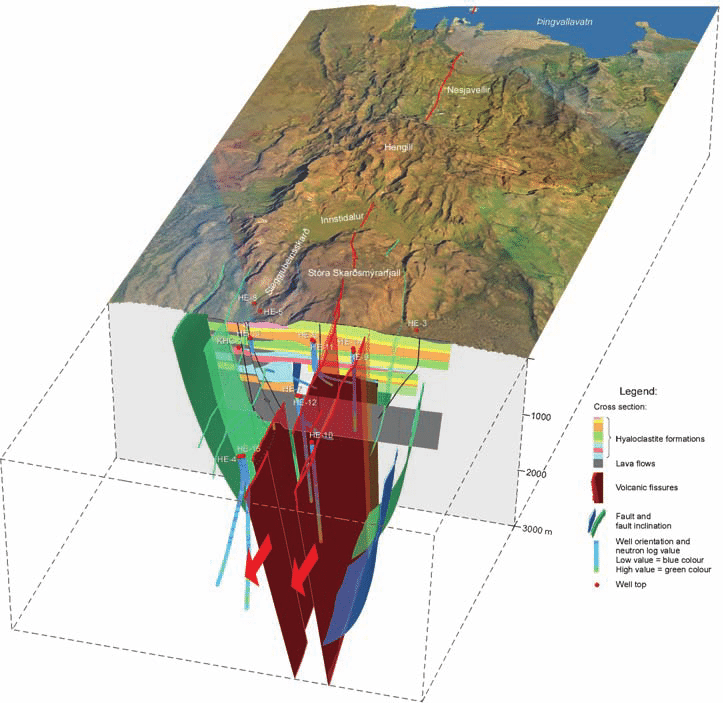

Extending the BIM concept to the geological, geotechnical, and environmental fields is natural, as it is in other industries that require 3D interpretation to find, understand, and solve problems. However, because these fields deal with natural materials, the software is more complex. For example, geological models often integrate various datasets, such as interpreted 2D and 3D seismic surveys, well logs, processed gravity and magnetic surveys, and borehole data. These data arrive in different formats, but the software converts them internally, so once they are georeferenced, their interconnections can be established.
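One small piece of that georeferencing step is placing a downhole observation, such as a logged core interval, in the same 3D coordinate system as the maps and geophysical surveys. The sketch below is a minimal illustration that assumes a straight borehole, an azimuth measured clockwise from north, and a dip measured downward from horizontal. The function name and the collar coordinates are hypothetical, and commercial packages typically work from full deviation surveys rather than a single orientation.

```python
import math


def downhole_to_xyz(collar, azimuth_deg, dip_deg, depth):
    """Return (east, north, elevation) of a point `depth` units down a hole.

    Assumptions (illustrative only):
    - the hole is straight from the collar,
    - azimuth is in degrees clockwise from north,
    - dip is in degrees below horizontal (90 = vertical, pointing down).
    """
    east, north, elevation = collar
    azimuth = math.radians(azimuth_deg)
    dip = math.radians(dip_deg)
    horizontal = depth * math.cos(dip)  # horizontal projection of the path
    return (
        east + horizontal * math.sin(azimuth),
        north + horizontal * math.cos(azimuth),
        elevation - depth * math.sin(dip),
    )


# Hypothetical collar at E 1000, N 2000, elevation 450 (all in meters).
collar = (1000.0, 2000.0, 450.0)

# A point 30 m down a vertical hole sits directly below the collar.
print(downhole_to_xyz(collar, azimuth_deg=0.0, dip_deg=90.0, depth=30.0))

# A point 10 m down a hole drilled due east at 45 degrees.
print(downhole_to_xyz(collar, azimuth_deg=90.0, dip_deg=45.0, depth=10.0))
```

Once every observation carries coordinates like these, borehole logs can be compared directly with geophysical profiles and mapped structures instead of being matched section by section.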

Flow of Hot Geothermal Fluids

The software contains algorithms that generate the models, using surfaces to define faults and lithologies. It calculates fault blocks and intermediate surfaces from a reference surface, and it models vein systems that curve, fold, and bifurcate. Structural data, when applied, influence the geometry of the surfaces and define their relationships within the fault system. The software also generates isosurfaces from drill holes and data points, allowing trends and surface continuity to be visualized. In addition, it automatically generates cross-sections and slices and can apply transparency to reveal internal features.
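To give a feel for how scattered drill-hole values become a continuous field from which an isosurface can be drawn, the sketch below uses inverse distance weighting (IDW). IDW is a simple stand-in chosen here for illustration; the article does not say which interpolation method any particular package uses, and real modeling software generally relies on more sophisticated techniques. The function names and sample values are hypothetical.

```python
import math


def idw(samples, query, power=2.0):
    """Inverse-distance-weighted estimate at `query`.

    `samples` is a list of ((x, y, z), value) pairs. Closer samples get
    larger weights (1 / distance**power). If the query coincides with a
    sample, that sample's value is returned directly.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for point, value in samples:
        distance = math.dist(point, query)
        if distance == 0.0:
            return value
        weight = 1.0 / distance ** power
        weighted_sum += weight * value
        weight_total += weight
    if weight_total == 0.0:
        raise ValueError("at least one sample is required")
    return weighted_sum / weight_total


def at_or_above(samples, nodes, threshold):
    """Flag each grid node whose interpolated value reaches `threshold`.

    The boundary between flagged and unflagged nodes approximates the
    isosurface for that threshold.
    """
    return [idw(samples, node) >= threshold for node in nodes]


# Hypothetical measurements (for example, an index logged in two holes).
samples = [((0.0, 0.0, 0.0), 10.0), ((2.0, 0.0, 0.0), 30.0)]

print(idw(samples, (1.0, 0.0, 0.0)))  # midway between the samples: 20.0
print(at_or_above(samples, [(0.5, 0.0, 0.0), (1.5, 0.0, 0.0)], 20.0))
```

Evaluating the same function on the nodes of a vertical plane would yield the values for a cross-section through the model.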

Many features are already available, such as saving 3D scenes, resource estimation, and rendering images to include in reports.

However, the most significant improvement that automation has brought to 3D modeling software is the centralization of data in the cloud. It simplifies collaboration between the project team and stakeholders and improves the progress and reliability of projects. There is still room for improvement, but 3D modeling is already a reality for many geological, geotechnical, and environmental projects.